Symbolic expressions in F#

- Preface

- Code

- Introduction

- Expressions

- Evaluating expressions

- Formatting expressions

- Simplifying expressions

- Differentiating expressions

- Conclusion

- References

- Discussion

Preface

While writing an article on automatic differentiation, which is still in work, I started showing a simple example of how one might symbolically differentiate a function using a computer as a contrasting comparison. Such an example felt better served by a full article, and this fleshed out article will be easier to reference than the original idea of a small example in the automatic differentiation article.

Using computers to manipulate symbolic expressions is not a new idea and goes all the way back to the 1950s (see References). But hopefully this article provides some helpful insight into how this works, how simple but powerful the idea is, and how F# provides an excellent language to represent the idea in.

Code

Supporting code is provided in a few forms.

-

Polyglot Notebooks (formerly known as .NET Interactive notebooks) are provided to help clarify the code and to be followed along with the article. There are two notebooks. One contains only the final versions of the core type and function definitions, and the other contains all code and examples. Link to notebooks.

-

A GitHub repository containing a full F# project implementation of the symbolic expressions usable as a library and NuGet package. This is currently unpublished, but this article will be updated with the repository later.

Introduction

When doing mathematics, we manipulate symbolic expressions. A function definition might be given as $f(x) = \cos(x^2)$, and to differentiate this function, we perform symbolic manipulation according to the rules of single-variable calculus:

\[\begin{align*} f'(x) &= -\sin(x^2) \cdot (x^2)' \tag{$\cos' = -\sin$ and chain rule} \\ &= -2x\sin(x^2) \tag{power rule} . \end{align*}\]How would we have a computer do this for us? That is the topic of this post, and we will use F# to implement a simple system.

We would like to be able to represent an expression such as $f(x) = cos(x^2)$ in F# such that we can manipulate it in various ways, such as differentiating it. We will concentrate on functions of a single variable. An initial thought might be that we can already do this already in nearly any programming language. For example, doesn’t the following already represent a single-variable mathematical expression?

> let f x = cos(x*x);;

val f: x: float -> float

Although this is indeed a function in F#, we don’t have access to the underlying representation where we can do something with it. It is possible that the F# compiler creates an expression tree, but all we can do is pass the function a value and receive a value:

> f 2.1;;

val it: float = -0.2978016413

So we need another way to represent expressions in code. Let’s first take a look at what expressions actually are. If we inspect an expression like $\cos(x\cdot x)$, we might visually represent this expression as:

This is a tree representation, which may not be clear at first, so let’s explore it. A question to start with is “how would we calculate the function $\cos(x^2)$, conveniently rewritten as $\cos(x\cdot x)$?”. We would proceed as follows:

- Given a value for $x$,

- we would “compute” $x$ “twice” (we can think of “compute” here as simply writing down the value),

- then we would compute $x\cdot x$,

- and finally, we would compute $\cos(x\cdot x)$.

It’s easiest to traditionally think of this computation as the tree starting from the bottom, but as we will soon see, it is convenient to think of the representation as top down.

Now, how should we implement an expression representation in F# that matches this idea?

Expressions

Let’s start off with building an expression type in F# that represents simple arithmetic with the operators $+, -, *, /$. One of the ways new types are defined in F# are with discriminated unions. Discriminated unions are also referred to as sum types, which are a special case of algebraic data types. For our initial use case, we want to build expressions using $+, -, *, /$ plus variables and constants. Let’s take a look at an initial implementation of an expression type and then discuss it.

type Expression =

| X

| Constant of float

| Negation of Expression

| Sum of Expression * Expression

| Difference of Expression * Expression

| Product of Expression * Expression

| Quotient of Expression * Expression

When reading discriminated unions, it is best to read the | symbol as the word “or”, and everything to the right of the | are called case identifiers which define value constructors for the type. So here, we are defining an Expression to be a variable X, a constant that consists of a float value, an Addition constructor that consists of two expressions, etc. When we see a type like 'T * 'U, this is a product type and are tuples in F#, and it is again an example of an algebraic data type. It is best to read * as “and”.

To create values of type Expression, we use the constructors defined by the case identifiers. Here’s some example expressions entered into FSI:

> X;;

val it: Expression = X

> Constant 2.0;;

val it: Expression = Constant 2.0

> Product (X, X);;

val it: Expression = Product (X, X)

> Product (Constant 4.1, X);;

val it: Expression = Product (Constant 4.1, X)

> Quotient (Constant 1.0, Sum (X, Constant 2.0));;

val it: Expression = Quotient (Constant 1.0, Sum (X, Constant 2.0))

> Quotient (Constant 1.0, Difference (Sum (X, Constant 2.0), Quotient (Constant 1.0, X)));;

val it: Expression =

Quotient

(Constant 1.0,

Difference (Sum (X, Constant 2.0), Quotient (Constant 1.0, X)))

A few things to note:

-

Expressions are terminated with

;;in FSI, but these are not required in normal F# modules or notebooks. This is simply so that you can enter multiple lines in FSI by pressing enter, which won’t terminate the expression you’ve entered until you write;;and hitEnter. -

A

floatvalue does not directly represent a value of typeExpression. A constant expression value must be constructed viaConstant, which has typefloat -> Expression. That means thatConstantis a function that takes a value of typefloatand returns a value of typeExpression. -

The variable constructor

Xtakes no argument. It is of typeExpression, which means it directly represents a value of typeExpression. We could have chosen another name likeVariableorYor anything. -

The constructors

Sum,Difference,Product, andQuotientare recursive. They take as arguments two values of typeExpressionand return a new value of typeExpression. This is what allows us to build an expression tree with these constructors. -

The convention in F# is to name case identifiers with the PascalCase capitalization convention.

-

While more verbose, the case identifier names are not abbreviated for clarity, which should be prioritized almost always when writing programs. This is at the cost of verbosity when entering expressions, but it is important to note that the expressions are there for the purposes of being represented and not for readability. The definition of

Expressionis readable, and that’s really all that matters because it enables clean code when pattern matching against anExpressionvalue. It will be rare to have to be reading expression values often. When we want to, we can introduce anExpressionprinter, and we do so below.

The last example expression is a bit complex, so let’s take a look at it. It represents the expression:

\[\begin{equation} \frac{1}{(x + 2) - 1/x}, \end{equation}\]where parentheses are there to clarify the order of operations that we specified when entering the F# expression, and it has an expression tree of:

Stare at this a bit, referencing the normal expression above. This is using the normal operator and variable names though. But our Expression type is a representation of an expression tree (that’s its power), and if we use our nomenclature of the Expression type, the expression tree is:

The complete implementation of Expression with more expressions like $\sin, \cos$, etc. looks like:

type Expression =

| X

| Constant of float

| Negation of Expression

| Sum of Expression * Expression

| Difference of Expression * Expression

| Product of Expression * Expression

| Quotient of Expression * Expression

| Sine of Expression

| Cosine of Expression

| Tangent of Expression

| Exponential of Expression

| Logarithm of Expression

| Power of Expression * exponent: int

with

static member C (x: int) = Constant x

static member C (x: float) = Constant x

static member (~-) expression = Negation expression

static member (+) (e1, e2) = Sum (e1, e2)

static member (-) (e1, e2) = Difference (e1, e2)

static member (*) (e1, e2) = Product (e1, e2)

static member (/) (e1, e2) = Quotient (e1, e2)

static member Sin e = Sine e

static member Cos e = Cosine e

static member Tan e = Tangent e

static member Exp e = Exponential e

static member Log e = Logarithm e

static member Pow e n = Power (e, n)

The additional expressions should be self-explanatory based on the discussion above since they just provide some additional built-in function expressions, but what about all those static member declarations? The first two provide two separate shorthands for creating constants, such as C 1 or C 3.1 instead of Constant 1.0 or Constant 3.1, respectively. The next five declarations are examples of operator overloading in F#. In F#, these operators are infix. That is, you call them like 2 + 3. However, you can use them as prefix, i.e. just a normal function, by putting the operator in parentheses, like (+) 2 + 3. Check out the type in FSI:

> (+);;

val it: (int -> int -> int) = <fun:it@4>

By declaring static members for these operators, we are overloading them for our Expression type. This allows us to more easily enter expressions, as we normally would with arithmetic expressions. It is good to point out here that not only have we created an Expression representation in F#, but we have also created an Expression language with a sort of domain specific language (DSL) that allows easier creation of Expression values. This is a very powerful aspect when working in F# and modeling domains with types.

Here’s some more examples:

> Product (Sine X, Cosine X);;

val it: Expression = Product (Sine X, Cosine X)

> Sine X * Cosine X;;

val it: Expression = Product (Sine X, Cosine X)

> Product (Sine X, Cosine X) = Sine X * Cosine X;;

val it: bool = true

Note the operator precedence and also that we get structural equality for free, as shown in the last example. The other static member declarations for Sin, Cos, and so on are normal static members. Normally, their use would require using the type name, such as

> Sin X;;

Sin X;;

^^^

stdin(29,1): error FS0039: The value or constructor 'Sin' is not defined. Maybe you want one of the following:

sin

Sine

sinh

sign

asin

> Expression.Sin X;;

val it: Expression = Sine X

However, if we use a special import declaration, then we can use them more fluidly.

> open type Expression;;

> Sin X;;

val it: Expression = Sine X

Now, we can enter expressions rather easily, almost identically to how we would by hand or when defining normal functions in F#.

> Cos (Log X) / X;;

val it: Expression = Quotient (Cosine (Logarithm X), X)

As mentioned before, it is fair to call this shorthand an expression language, although our underlying representation defined by Expression’s value constructor is also an expression language.

This is already pretty slick, and we’ve barely written any code! All we’ve done is basically write down our type. This is a good moment to pause and reflect on the declarative nature of F# and similar languages and the value of domain-driven design. We are simply declaring and describing our domain with F#’s syntax and semantics. By doing so, the problem we’re faced with, which is representing, evaluating, simplifying, and differentiating expressions, is going to basically just “fall out” of our type definitions. The dialects of ML (short for Meta Language) such as Standard ML (SML), OCaml, and F# excel at this type of thing, as do Lisp and Scheme dialects (Common Lisp, MIT/GNU Scheme, Racket, Chez Scheme, etc.).

This is where it starts getting exciting, so let’s take a look at how to evaluate our new expressions.

Evaluating expressions

Right now, we have the ability to define new expressions and bind them, but how do we evaluate them? That is, if we have $f(x) = \sin x + \cos x$, how do we find $f(2)$ in our new expression language?

> let f = Sin X + Cos X;;

val f: Expression = Sum (Sine X, Cosine X)

Note that this is different than let f x = sin x + cos x, which is an F# function using the built-in sin and cos functions, so we can’t just pass in a value, say 2, to this f and get back an answer. We have defined symbolic expressions that will allow us to easily manipulate them. This will be especially apparent in the simplifying and differentiating sections, but evaluating expressions will give us some insight into how we do all of that.

Let’s first consider a simple case of just sum or product expressions. Assume our Expression type was only the following:

type Expression =

| X

| Sum of Expression * Expression

This is for illustrative purposes only. Now consider the expression Sum (X, X). How do we evaluate this expression at say 2? There are two questions here: (1) how do we evaluate X at 2, and then how do we evaluate Sum (X, X) at 2. We will use F#’s powerful pattern matching functionality. Addressing, the first question:

let evaluate (a: float) expression =

match expression with

| X -> a

This is an F# function named evaluate that takes a float and an Expression and returns a float representing the evaluation of the expression at a. The match expression will search the options (in this case, there’s only one) until a match occurs. So when expression is just X, the pattern clause for it matches and returns the thing to the right of the ->. Thus, when evaluating the expression X at a, we simply return the value a. We should note here that F# will actually complain, rightfully so, about the match above. That’s because we have not covered all the possible values of expression, which could be an Expression constructed by Sum. This is called exhaustive pattern matching, and it is incredibly vital because F# will check and warn when not all possible values of a type have been accounted for in a match expression.

Let’s tackle that now, since we need to know what Sum (X, X) is.

let evaluate (a: float) expression =

match expression with

| X -> a

| Sum (e1, e2) -> // <?>

We’ve added a clause for Sum, but left its evaluation as a question for now. What do we mean by Sum (e1, e2)? Aren’t we trying to evaluate Sum (X, X)? Yes, we are, but to do so, we don’t want to hamstring ourselves by assuming that Sum will always be summing together X with itself. Indeed, our definition of Sum shows that it generally takes two arbitrary expressions. Recall that the type is Sum : Expression * Expression -> Expression. So, that’s what we pattern match on in general. By pattern matching against Sum (e1, e2), in a successful match, F# will take a Sum expression and bind its first expression to e1 and its second expression to e2. These can then be used in the result expression. And to be clear, both e1 and e2 are of type Expression, because that’s how Sum is declared.

With this knowledge, what should the evaluation of Sum (e1, e2) be? One guess could be e1 + e2. But in fact, that is identical to Sum (e1, e2) since we overloaded + for expressions and e1 and e2 could be any expression. What we really need to do is sum up the evaluated values of both e1 and e2 at a. Since that’s the very function we’re trying to build, that means recursion is needed! That is, we will call evaluate for both e1 and e2, separately, at a and then sum up the result.

let rec evaluate (a: float) expression =

match expression with

| X -> a

| Sum (e1, e2) -> (evaluate a e1) + (evaluate a e2)

A couple of notes. In F#, we need to use the rec keyword to mark a function as being recursive (i.e., it calls itself in its definition). The + on the right hand side of the Sum pattern is the + for float and not for Expression, because evaluate returns a float value. It’s entire point of existing is to return an actual float value.

In general, the game here is to pattern match against all possible values of Expression and then use standard F# functions and operators in the result expressions to return an actual number. So we here is where we are mapping between the Expression type and the underlying F# representations for all of the Expression cases.

Here’s the final implementation of evaluate.

let rec evaluate a expression =

match expression with

| X -> a

| Constant f -> f

| Negation e -> evaluate a (Product (Constant -1.0, e))

| Product (e1, e2) -> (evaluate a e1) * (evaluate a e2)

| Quotient (e1, e2) -> (evaluate a e1) / (evaluate a e2)

| Sum (e1, e2) -> (evaluate a e1) + (evaluate a e2)

| Difference (e1, e2) -> (evaluate a e1) - (evaluate a e2)

| Sine e -> sin (evaluate a e)

| Cosine e -> cos (evaluate a e)

| Tangent e -> tan (evaluate a e)

| Exponential e -> exp (evaluate a e)

| Logarithm e -> log (evaluate a e)

| Power (e, c) -> pown (evaluate a e) c

-

Constant float: In pattern matching we match the binding variablefagainst thefloat(as in the type) value coming in. Then evaluating aConstantis just returning the underlyingfloatvalue. -

Sine,Cosine, etc.: These functions similar simply evaluate down to using F#’s built-in versions of these that operate onfloats, recursively evaluating the inner expressions.

Some examples for $f(x) = \sin (\cos (2 \tan 3))$, $g(x) = (\cos x \cdot \sin x) / (2 e^{1/x})$, and $h(x) = \cos^2 x + \sin^2 x$ are below.

> let f = Sine (Cosine (Product (Constant 2.0, Tangent (Constant 3.0))));;

val f: Expression =

Sine (Cosine (Product (Constant 2.0, Tangent (Constant 3.0))))

> printfn "Normal statement: %A\nEvaluated expression: %A" (sin (cos (2.0 * tan 3.0))) (evaluate 0 f);;

Normal statement: 0.8189824524

Evaluated expression: 0.8189824524

val it: unit = ()

> let g = (Cos X) * (Sin X) / ( (C 2) * Exp( C 1 / X));;

val g: Expression =

Quotient

(Product (Cosine X, Sine X),

Product (Constant 2.0, Exponential (Quotient (Constant 1.0, X))))

> evaluate 1.0 g;;

val it: float = 0.08362795731

> let h = Pow (Cos X, 2) + Pow (Sin X, 2);;

val h: Expression = Sum (Power (Cosine X, 2), Power (Sine X, 2))

> evaluate 1.0 h;;

val it: float = 1.0

> evaluate 2.0 h;;

val it: float = 1.0

> evaluate 3.0 h;;

val it: float = 1.0

Hmm, this last example is intriguing. Recalling trigonometric identities, $h(x) = \cos^2 x + \sin^2 x = 1$. Although our evaluation is working correctly, what about trying to encode such a simplification such that we don’t need to continually evaluate such a constant expression? The answer is more recursive pattern matching.

Formatting expressions

Before we move on to simplifying expressions, let’s first build a pretty printer for our expressions. So far, we have the ability to more easily create expressions via the more natural syntax provided by our static member overloads and definitions, such as

> C 1 - Pow (Cos X, 2);;

val it: Expression = Difference (Constant 1.0, Power (Cosine X, 2))

But it would be nice if we could display expressions in a more natural looking form. The full on Expression language we defined is great for pattern matching, and is actually the only option there since we can only use the type constructors for pattern matching, but how about just something like 1 - cos^2 x for the above expression? To do this, we simply follow the pattern that we did above for evaluating, except instead of deconstructing an Expression to a float value, we want to deconstruct the Expression to a string value. So for every expression constructor, we want to define a pattern clause or clauses that returns a string for a more nominal string representation of the representative mathematical expression.

Here is the full pretty formatter used in this article:

let rec format expression =

match expression with

| X -> "x"

| Constant 1.0 -> "1"

| Constant float -> string float

| Negation e -> sprintf "-%s" (format e)

| Product (Constant 1.0, e2) -> format e2

| Product (e1, Constant 1.0) -> format e1

| Product (Constant c, e2) -> sprintf "%s%s" (string c) (format e2)

| Product (e1, Constant c) -> format (Product (Constant c, e1))

| Product (e1, e2) -> sprintf "(%s * %s)" (format e1) (format e2)

| Quotient (e1, e2) -> sprintf "(%s / %s)" (format e1) (format e2)

| Sum (e1, e2) -> sprintf "(%s + %s)" (format e1) (format e2)

| Difference (e1, e2) -> sprintf "(%s - %s)" (format e1) (format e2)

| Sine e -> sprintf "sin(%s)" (format e)

| Cosine e -> sprintf "cos(%s)" (format e)

| Tangent e -> sprintf "tan(%s)" (format e)

| Exponential e -> sprintf "exp(%s)" (format e)

| Logarithm e -> sprintf "log(%s)" (format e)

| Power (e, 0) -> "1"

| Power (e, 1) -> format e

| Power (Sine e, n) -> sprintf "sin^%d(%s)" n (format e)

| Power (Cosine e, n) -> sprintf "cos^%d(%s)" n (format e)

| Power (Tangent e, n) -> sprintf "tan^%d(%s)" n (format e)

| Power (e, n) -> sprintf "%s^%d" (format e) n

which has type expression: Expression -> string. It takes an Expression and returns a string consisting of the formatted Expression.

An example:

> Pow (Cos (C 1 - C 2 * X), 2) |> format;;

val it: string = "cos^2((1 - 2x))"

-

We use

sprintfallows us to functionally format values. Note that it doesn’t actually print to a console or anything. It simply returns a formatted string value. -

We again recursively operate by simplifying what we can and putting that into an

sprintfformat string, and then continue to print the sub-expressions. -

Special cases are handled as appropriate. Note that such a formatter could keep going to keep adding more niceties.

-

Such a formatting scheme results in redundant parentheses, which isn’t necessarily an easy problem to address. One would need to encode in our pattern matching some knowledge about operation precedence.

-

The

formatfunction has no side effects. It has the typeExpression -> string. It is always beneficial in F# to have so-called pure functions, functions that do not have side effects such as writing to a file or printing to the console, so that they may be easily tested and debugged. Sinceformatalways gives astring, we can always pipe it into a function that writes it to the console, for example.

Lastly, might even be useful to implement a $\LaTeX$ pretty formatter!

let rec formatAsLaTeX expression =

match expression with

| X -> "x"

| Constant 1.0 -> "1"

| Constant float -> string float

| Negation e -> sprintf "-%s" (formatAsLaTeX e)

| Product (Constant 1.0, e2) -> formatAsLaTeX e2

| Product (e1, Constant 1.0) -> formatAsLaTeX e1

| Product (Constant c, e2) -> sprintf "%s%s" (string c) (formatAsLaTeX e2)

| Product (e1, Constant c) -> format (Product (Constant c, e1))

| Product (e1, e2) -> sprintf @"(%s \cdot %s)" (formatAsLaTeX e1) (formatAsLaTeX e2)

| Quotient (e1, e2) -> sprintf @"\frac{%s}{%s}" (formatAsLaTeX e1) (formatAsLaTeX e2)

| Sum (e1, e2) -> sprintf "(%s + %s)" (formatAsLaTeX e1) (formatAsLaTeX e2)

| Difference (e1, e2) -> sprintf "(%s - %s)" (formatAsLaTeX e1) (formatAsLaTeX e2)

| Sine e -> sprintf @"\sin(%s)" (formatAsLaTeX e)

| Cosine e -> sprintf @"\cos(%s)" (formatAsLaTeX e)

| Tangent e -> sprintf @"\tan(%s)" (formatAsLaTeX e)

| Exponential e -> sprintf "e^{%s}" (formatAsLaTeX e)

| Logarithm e -> sprintf @"\log(%s)" (formatAsLaTeX e)

| Power (e, 0) -> "1"

| Power (e, 1) -> formatAsLaTeX e

| Power (Sine e, n) -> sprintf @"\sin^%d(%s)" n (formatAsLaTeX e)

| Power (Cosine e, n) -> sprintf @"\cos^%d(%s)" n (formatAsLaTeX e)

| Power (Tangent e, n) -> sprintf @"\tan^%d(%s)" n (formatAsLaTeX e)

| Power (e, n) -> sprintf "%s^{%d}" (formatAsLaTeX e) n

An example:

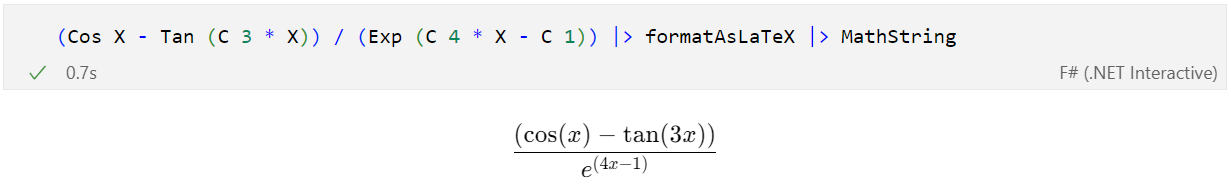

> (Cos X - Tan (C 3 * X)) / (Exp (C 4 * X - C 1)) |> formatAsLaTeX;;

val it: string = "\frac{(\cos(x) - \tan(3x))}{e^{(4x - 1)}}"

The output looks like $\displaystyle \frac{(\cos(x) - \tan(3x))}{e^{(4x - 1)}}$ when rendered with $\LaTeX$. In fact, Polyglot Notebooks have a function provided called MathString : latexCode: string -> unit that takes a string consisting of $\LaTeX$ and renders it directly in the notebook! Here’s a screenshot of a notebook cell using this feature:

It should be clear by now that F# is a wonderful language, with piping |> feeling very fluid, and that Polyglot Notebooks with F# are a wonderful way to explore a topic. The declarative nature of our types, functions, and usage of them feels makes it such that we just write out “sentences” for what we would like to happen.

Simplifying expressions

We are almost there with regards to differentiation, but we’re going to tackle simplifying expressions first. This will come in handy since before and after differentiating a function, it is likely not be in an expected simplified form.

Despite the name, simplifying expressions is the most complex out of everything in this article. There are a couple reasons for this. One is that the problem itself is not well defined. That is, there is not a deterministic stopping point at which an expression is at its “simplest”, so it’s not clear when to stop simplifying. There is even a possibility of infinite oscillation between two simplified forms, such that one simplification rule takes us to one expression that then gets simplified back to the prior expression. So determining appropriate stopping conditions is the first problem. The second related problem is a sort of decision problem. For example, suppose we have the expression $x^2-4$. This seems simplified to me. But now suppose that we have the expression

\[\begin{equation} \frac{x^2-4}{x-2} . \end{equation}\]Now, if we consider the numerator simplified as before, then this expression may be considered to be simplified. However, if we expand the numerator, then a further simplification becomes apparent.

\[\begin{equation} \frac{x^2-4}{x-2} = \frac{(x+2)(x-2)}{x-2} = x+2, \text{ when $x\neq 2$} . \end{equation}\]So in this case, expanding the numerator from a simplified form, actually enabled us to further simplify the whole expression, but note that we also need to keep track of values for which the simplification does not hold. Hopefully, it’s becoming quickly apparent that, even for simple expressions, creating a simplification algorithm is not an easy thing.

Other associated issues are operator, constant, and variable precedence. In our definition of Expression, we are only allowing a single variable expression of X. As we mentioned before, we could create a case identifier of Variable of name: string such that we would have multiple variables. We could even have the situation of constant variables such as $a$ in something like $f(x) = 2ax$, where $a\in\mathbb{R}$. So, we would have to make some decisions, such as some lexical ordering for an expression like $f(x,y,z) = yx2byza2$, where $a,b\in\mathbb{R}$, to be simplified down to $f(x,y,z) = 4abxy^2z$. Such a lexical ordering rule would be something like constants first, then constant variables next ordered alphabetical, and then varaibles, again ordered alphabetically. Throw in other functions like the trigonometric functions and such, and we have again demonstrated that things get complicated quickly.

The good news is that we are not going to address any of this. We are going to have a simplify function that is relatively simple and mainly takes care of easily simplified pieces. This will suffice for our uses, which is to mainly showcase symbolic differentiation.

We will cover some of the details below, so here’s the full simplify function:

let simplify expression =

let rec simplifier expression =

match expression with

| Sum (Constant c1, Constant c2) -> Constant (c1 + c2)

| Sum (X, X) -> Product (Constant 2, X)

| Sum (Constant 0.0, e2) -> simplifier e2

| Sum (e1, Constant 0.0) -> simplifier e1

| Sum (Product (Constant c1, X), Product (Constant c2, X)) ->

simplifier (Product (Sum (Constant c1, Constant c2), X))

| Sum (Power (Cosine X, 2), Power (Sine X, 2)) -> Constant 1.0

| Product (X, X) -> Power (X, 2)

| Product (Constant 1.0, e2) -> simplifier e2

| Product (Constant 0.0, _) -> Constant 0

| Product (e1, Constant c) -> simplifier (Product (Constant c, e1))

| Constant _ as e -> e

| X -> X

| Negation e -> Negation (simplifier e)

| Product (e1, e2) -> Product (simplifier e1, simplifier e2)

| Quotient (e1, e2) -> Quotient (simplifier e1, simplifier e2)

| Sum (e1, e2) -> Sum (simplifier e1, simplifier e2)

| Difference (e1, e2) -> Difference (simplifier e1, simplifier e2)

| Sine e -> Sine (simplifier e)

| Cosine e -> Cosine (simplifier e)

| Tangent e -> Tangent (simplifier e)

| Exponential e -> Exponential (simplifier e)

| Logarithm e -> Logarithm (simplifier e)

| Power (e, 0) -> e

| Power (e, 1) -> simplifier e

| Power (e, n) -> Power (simplifier e, n)

// Run the simplifier five times to try and catch most simplifications

expression |> simplifier |> simplifier |> simplifier |> simplifier |> simplifier

Again, we are following the procedure of matching against various combinations of the Expression type’s value constructors, and then providing how that particular match should be simplified. For example, we know that for any $x$ we have $0 + x = x + 0 = x$, where $x$ could even be another expression. This is encoded by the two match clauses

| Sum (Constant 0.0, e2) -> simplifier e2

| Sum (e1, Constant 0.0) -> simplifier e1

These collapse the expressions on the left to the non-zero portions and then further simplifies those expressions.

For such a simplifier, we can get as detailed and complex as we’d like, providing simplification rules for general expressions but also specific expressions like the trigonometric identities. One such example that we have included in our simplifier is the fact that $\cos^2 +\sin^2 x = 1$, which could be matched and simplified by

| Sum (Power (Cosine X, 2), Power (Sine X, 2)) -> Constant 1.0

But would this catch an expression like $\cos^2(x + 0) + \sin^2(0 + x)$ and simplify it to $1$? It would not, because in this particular pattern match, we’re only matching if Cosine and Sine contain an X expression. We could extend our match with a when clause which allows us to add an additional boolean clause to the pattern match. For the clause to fully match, both the pattern match needs to match and the boolean clause needs to return true. So, what about the following?

| Sum (Power (Cosine e1, 2), Power (Sine e2, 2)) when e1 = e2 -> Constant 1.0

This looks good, because we will match whenever Cosine and Sine contain the same expression. And unlike regular functions in F#, we can directly compare expressions because they are just values. Again, this is termed structural comparison or structural equality. But does this solve the original question of properly simplifying an expression representing $\cos^2(x + 0) + \sin^2(0 + x)$ down to $1$? The answer is no, and that’s because X + C 0 does not equal C 0 + X.

> X + C 0 = C 0 + X;;

val it: bool = false

However, we have already discussed simplifying such expressions and the simplification shows that both expressions simplify down to X and are thus equal. So, all we need to do is simplify the two expressions before comparing them using our local simplifier function. We could make simplify recursive and use that instead, but we’re just going to keep it simple and use simplifier. The final clause is:

| Sum (Power (Cosine e1, 2), Power (Sine e2, 2)) when simplifier e1 = simplifier e2 -> Constant 1.0

Why do we repeatedly call simplifier five times? The reason is that a single pass of simplifier might not be enough.

Question: Before moving on, can you think of an expression where simplifier would return but not have fully simplified the expression before returning if simplifier was just called once on an expression?

What about the expression $(0\cdot x + 2)x + 3x$ represented by the following?

> (C 0 * X + C 2) * X + C 3 * X;;

val it: Expression =

Sum

(Product (Sum (Product (Constant 0.0, X), Constant 2.0), X),

Product (Constant 3.0, X))

This should simplify down to $0$, but if we called simplifier on it just once, we would get

> (C 0 * X + C 2) * X + C 3 * X |> simplifier |> format;;

val it: string = "(((0 + 2) * x) + 3x)"

(Note that simplifier isn’t a public function. This is just to help showcase the example.) Why did this happen? Because in just one pass of simplifier, we eventually hit the case of C 0 * X that matches against Product (Constant 0.0, _) and just returns Constant 0. Then simplifier doesn’t have a mechanism built-in to go back up the tree and simplify 0 + C 2 to just C2, and so on. To do so would be an interesting problem for sure, but for this implementation, we’ll just repeat simplifier an arbitrary amount of five times and hope that catches most of the cases we’ll use for now.

Exercise: Add a pattern match clause that simplifies expressions like $e^{\log f(x)}$ and $\log(e^{f(x)})$ to $f(x)$.

Exercise: Add a pattern match clause that simplifies an expression like $1-\sin^2 \text{<expression>}$ to $\cos^2 \text{<expression>}$.

Exercise: Add a pattern match clause that simplifies expressions like $xxx$, $xx^2$, and $x^2x$ to $x^3$.

Exercise: Add a pattern match clause that simplifies expressions like $\text{<expression>} - \text{<expression>}$ and $\text{<expression>} + (-\text{<expression>})$ to $0$. Concrete examples that should work with this new pattern match clause and our existing simplification scheme are $x-x = 0$, $xx-x^2 = 0$, $\cos x + (-\cos x)$. Note that the last example could be created, using our expression language, as Cos X + -(Cos X).

> Cos X + -(Cos X);;

val it: Expression = Sum (Cosine X, Negation (Cosine X))

Differentiating expressions

We are finally here! And I am sorry to disappoint you, as it is somewhat anticlimactic. The reason is that we’ve already played this game several times by this point. In other words, to differentiate an expression, we simply pattern match against possible Expression values, and then return a differentiated expression according to the normal rules of calculus. So it’s the same thing we’ve been doing, but now we just need to encode the rules of differentiation.

Recalling the differentiation rules of calculus and that $f’(x)$ or simply $f’$ means the derivative of $f$, we have:

\[\begin{align*} \text{Constants: } && (c)' &= 0, \text{ when } c\in\mathbb{R} \\ \text{Multiplication by constant: } && (c\cdot f(x))' &= c \cdot f'(x), \text{ when } c\in\mathbb{R} \\ \text{Power rule: } && (x^n)' &= n\cdot x^{n-1} \\ \text{Sum/Difference rule: } && \big[ f(x) \pm g(x) \big]' &= f'(x) \pm g'(x) \\ \text{Product rule: } && (f\cdot g)'(x) &= f'(x)g(x) + f(x)g'(x) \\ \text{Quotient rule: } && (f/g)'(x) &= \frac{f(x)\cdot g'(x) - f'(x)\cdot g(x)}{g^2(x)} \\ \text{Chain rule: } && (f \circ g)'(x) &= f'(g(x)) \cdot g'(x) \\ \text{Exponential: } && \big[ e^{f(x)} \big]' &= e^{f(x)} \cdot f'(x) \\ \text{Logarithm: } && \big[ \log f(x) \big]' &= \frac{1}{f(x)} \cdot f'(x) \\ \text{Sine: } && \sin' &= \cos \\ \text{Cosine: } && \cos' &= -\sin \\ \text{Tangent: } && \tan' &= \sec^2 = \frac{1}{\cos^2} \end{align*}\]There are of course many more differentiation rules and results, but these are all that we’ll be dealing with here. Now that we’ve written these down, we are basically done. All we have to do is encode these rules into a function with pattern matching, and since our expression language is so well built for this, we just convert these rules directly into our expression language.

let differentiate expression =

let rec D expression =

match simplify expression with

| Constant _ -> Constant 0

| X -> Constant 1

| Negation e -> Negation (D e)

| Product (e1, e2) -> Sum (Product (D e1, e2), Product (e1, D e2))

| Quotient (e1, e2) -> ((D e1 * e2) - (e1 * D e2)) / Power (e2, 2)

| Sum (e1, e2) -> Sum (D e1, D e2)

| Difference (e1, e2) -> Difference (D e1, D e2)

| Sine e -> Product (Cosine e, D e)

| Cosine e -> Product (Negation (Sine e), D e)

| Tangent e -> Product (Product (Quotient (Constant 1, Cosine e), Quotient (Constant 1, Cosine e)), D e)

| Exponential e as exp -> Product (exp, D e)

| Logarithm e -> Product (Quotient (Constant 1, e), D e)

| Power (e, n) -> Product (Product (Constant n, Power (e, n-1)), D e)

D expression |> simplify

As you can see, this is practically identical to the rules we wrote out above using D as a sort of differentiation operator, just how you might use $d/dx$ in calculus instead of the $’$ notation. Go over them to convince yourself and to check that they’re right. The only thing that may be viewed as an extra case is the rule

| X -> Constant 1

We need it due to how the Expression type is defined since we need to cover all possible values of Expression. And note that we simplify the expression both before and after the differentiation process to try and return simple expressions.

Let’s try it out. Let’s differentiate $f(x) = \cos(x^2)$.

> let f = Cosine (Product (X, X));;

val f: Expression = Cosine (Product (X, X))

> differentiate f;;

val it: Expression =

Product (Negation (Sine (Power (X, 2))), Product (Constant 2.0, X))

> differentiate f |> format;;

val it: string = "(-sin(x^2) * 2x)"

If we did the differentiation by hand, we probably would have ended up with $f’(x) = -2x\sin(x^2)$, so this is great!

As I mentioned, this is a bit anti-climactic since we have went over and over this pattern matching process on expressions. But, this is still really quite amazing at the same time, because we are now easily differentiating arbitrary expressions as long as they are definable by our expression language. And we could continue adding more expression types. Then F# will warn us that all of our pattern matches have incomplete matches, which is a fantastic feature that lets us know where we should add in new match clauses for the new Expression value constructors. And all in all, this is all implemented in just 10s of lines of F# code.

Exercise: Define some of your own expressions and differentiate them. Are they correct when you verify them by hand? How close are the simplified forms to what you get by hand?

Exercise: Try out what we mentioned about adding a new function. For example, try adding a new expression case identifier of Secant of Expression, and then add a new static member Sec and update the evaluate, format, simplify, and differentiate functions to handle this new case.

Conclusion

What we have ended up with is a collection of F# types and functions that provide:

-

An

Expressiontype allowing arbitrary expressions to be represented. The expressions are only constrained by the defined case identifiers, but those can be easily expanded to include new special functions. -

A shorthand expression language, defined using operator overloads and static member functions that can be imported via

open type Expression, that allows us to easily define expressions similarly to how we might define them by hand or define normal arithmetic functions in F#. -

The functions

evaluate,format,formatAsLaTeX,simplify, anddifferentiatethat operate on expressions of typeExpressionand do exactly as their name implies. -

A purely functional interface in that all functions take a value and return a value, meaning that these functions can be piped, expressions can be manipulated and created with other F# functions such as list comprehensions, list functions, etc. As an example, the following function would generate the function

f(x) = x^n:> let xToTheNthPower n = List.fold (fun acc _ -> Product (X, acc)) X [1..n-1];; val xToTheNthPower: n: int -> Expression > xToTheNthPower 1;; val it: Expression = X > xToTheNthPower 2;; val it: Expression = Product (X, X) > xToTheNthPower 3;; val it: Expression = Product (X, Product (X, X)) > xToTheNthPower 4;; val it: Expression = Product (X, Product (X, Product (X, X))) > xToTheNthPower 5;; val it: Expression = Product (X, Product (X, Product (X, Product (X, X))))

This is all really quite amazing because now, we have taught F# (i.e., a computer!) how to represent symbolic expressions, how to evaluate them, how to simplify them, how to format them and even format them as $\LaTeX$, and then ultimately differentiate them! And all this is done via relatively simple symbolic rules, we have encoded calculus as F# types and functions!

Lastly, we should reflect upon just how easy a language like F# makes this idea realizable and communicable to other humans and developers. One might even consider that this could supplement calculus teaching. With almost no effort at all, we can now write programs that do all sorts of symbolic manipulations. And we really don’t have to stop here. We could continue building up pieces of calculus, expanding to multi-variate functions, and even to integration. Although symbolic integration is a much harder problem, just as it is when we do it by hand (which is really just human symbolic manipulation). There could even be solvers. These are basically the humble beginnings of a system like Mathematica. Although what we have built here is simple, it is still powerful and useful and can be expanded. You could use this in programs right now, and with some targeted extensions, it would keep increasing in usefulness.

This whole business is a powerful and beautiful example of simple things doing complex things.

Exercise: Define a composition case identifier in the Expression type definition and propagate that throughout the functions we defined. Note that we already handle implicit composition, but we want to handle it more generally. For example, it would be nice to be able to do the following:

> let f = X * X;;

val f: Expression = Product (X, X)

> let g = Cos X;;

val g: Expression = Cosine X

> let f_of_g = Compose (f, g);;

???

In other words, Compose of f: Expression * g: Expression would represent the composition of functions $f\circ g$. Maybe even add an operator overload, such as @@ to represent composition. Note that this is actually not as easy as it sounds, so have fun playing with it. This article may be updated in the future with such an addition.

Exercise: Update Sum, Difference, Product, and Quotient to take an Expression list instead of just an Expression * Expression tuple. This would mean that the expression language would operate somewhat similarly to how arithmetic expressions in Lisp and Scheme work, such as (+ 1 2 3 4) returning 10. Do you prefer keeping things as the Expression * Expression tuple or the Expression list?

References

-

Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I by John McCarthy

- Copy of an original memo by McCarthy from March 1959

- Typeset version of paper published in April 1960

- Note: although originally planned, there is no part II.

-

Functional Programming Using F# by Michael R. Hansen and Hans Rischel

- Section 6.2 : Symbolic differentiation, pp. 127-131

-

Structure and Interpretation of Computer Programs by Harold Abelson and Gerald Jay Sussman with Julie Sussman

- Section 2.3.2: Symbolic Differentiation: pp. 145-151

- Course presentations on the topic by Gerald Jay Sussman himself (the full lectures by both Abelson and Sussman are an absolute must):

- Paradigms of Artificial Intelligence Programming: Case Studies in Common Lisp by Peter Norvig